Natural Language Generation (NLG) is a branch of AI that generates human language from structured data. It is closely related to Natural Language Processing (NLP), but the two are distinct.

Put simply, NLP lets a computer read, and NLG lets it write. NLG is a fast-growing field that brings computers closer to communicating the way we do. It is currently used for writing suggestions, such as email autocompletion, and even for producing human-readable text without any human intervention.
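To make "generating language from structured data" concrete, here is a minimal template-based sketch. The `MatchResult` record, its fields and the wording rules are all hypothetical; template filling is only the simplest form of NLG, and features such as email autocompletion rely on learned language models instead.

```python
# A minimal, hypothetical data-to-text sketch: structured record in, sentence out.
from dataclasses import dataclass


@dataclass
class MatchResult:
    """Hypothetical structured input: one football match result."""
    home: str
    away: str
    home_goals: int
    away_goals: int


def describe(result: MatchResult) -> str:
    """Render one record as an English sentence using fixed templates."""
    if result.home_goals == result.away_goals:
        return (f"{result.home} and {result.away} drew "
                f"{result.home_goals}-{result.away_goals}.")
    # Order the teams so the sentence always leads with the winner.
    if result.home_goals > result.away_goals:
        winner, loser = result.home, result.away
        high, low = result.home_goals, result.away_goals
    else:
        winner, loser = result.away, result.home
        high, low = result.away_goals, result.home_goals
    # Vary the verb with the margin of victory.
    verb = "crushed" if high - low >= 3 else "beat"
    return f"{winner} {verb} {loser} {high}-{low}."


if __name__ == "__main__":
    print(describe(MatchResult("Rovers", "United", 1, 4)))
    # prints: United crushed Rovers 4-1.
```

Real data-to-text systems add many more templates, aggregation and referring-expression rules, but the input/output contract is the same: a record goes in and a fluent sentence comes out.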

In this article, you will learn hands-on how this process works and how to generate, with just a few lines of code, artificial news headlines that are indistinguishable from real ones.
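The article's own code is not shown in this excerpt. As a stand-in, and not necessarily the technique it uses, the sketch below trains a word-level Markov chain on a few made-up headlines (the `HEADLINES` list is hypothetical) and samples new ones by following observed word-to-word transitions. A chain this small mostly recombines fragments of its training data, so its output will not pass for real headlines.

```python
import random
from collections import defaultdict

# Hypothetical training headlines; a real experiment would use thousands.
HEADLINES = [
    "stocks rally as tech earnings beat forecasts",
    "tech giants face new privacy rules",
    "new rules for electric cars announced",
    "stocks fall as oil prices rise",
]

START, END = "<s>", "</s>"  # sentinel tokens marking headline boundaries


def build_chain(headlines):
    """Map each word to the list of words observed right after it."""
    chain = defaultdict(list)
    for line in headlines:
        words = [START] + line.split() + [END]
        for current, following in zip(words, words[1:]):
            chain[current].append(following)
    return chain


def generate(chain, rng, max_words=12):
    """Walk the chain from START until END or the length limit is reached."""
    word, output = START, []
    while len(output) < max_words:
        # Repeated followers appear multiple times, so frequent transitions win more often.
        word = rng.choice(chain[word])
        if word == END:
            break
        output.append(word)
    return " ".join(output)


if __name__ == "__main__":
    chain = build_chain(HEADLINES)
    rng = random.Random(42)  # fixed seed so runs are reproducible
    for _ in range(3):
        print(generate(chain, rng))
```

With a larger corpus, conditioning on the previous two words instead of one usually gives more fluent output, at the cost of copying longer stretches of the training headlines verbatim.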

Daily Archives: January 20, 2021

How MLOps Can Help Get AI Projects to Deployment

Did you know that most AI projects never get fully deployed? In fact, a recent survey by NewVantage Partners revealed that only 15% of leading enterprises have gotten any AI into production at all. Many models get built and trained but never reach the business scenarios where they could provide insight and value. This gap, dubbed the production gap, leaves models unused, wastes resources and stops AI ROI in its tracks.

But it’s not the technology that is holding things back. In most cases, the barriers that keep businesses and organizations from becoming data-driven come down to three things: people, process and culture. So how can we overcome these challenges and start getting real value from AI? To close this production gap and finally get ROI from their AI, enterprises must consider formalizing an MLOps strategy.

MLOps, or machine learning operations, refers to the combination of people, processes, practices and underpinning technologies that automate the deployment of machine learning models into production, along with their monitoring and management, in a scalable, fully governed way.
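One small piece of this automation is a governed deployment step: a new model version goes to production only if it measurably beats the one already there, and every decision is logged. The in-memory sketch below assumes accuracy on a fixed holdout set as the promotion criterion; `ModelRegistry`, `promote_if_better` and the 0.01 margin are hypothetical, and real MLOps platforms layer persistent storage, approvals and monitoring on top of this idea.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class ModelVersion:
    """Hypothetical record for one trained model version."""
    name: str
    version: int
    holdout_accuracy: float  # measured on a fixed evaluation set


@dataclass
class ModelRegistry:
    """Toy in-memory registry: tracks versions and which one serves production."""
    versions: List[ModelVersion] = field(default_factory=list)
    production: Dict[str, ModelVersion] = field(default_factory=dict)
    audit_log: List[str] = field(default_factory=list)  # governance trail

    def register(self, model: ModelVersion) -> None:
        self.versions.append(model)
        self.audit_log.append(f"registered {model.name} v{model.version}")

    def promote_if_better(self, model: ModelVersion, min_gain: float = 0.01) -> bool:
        """Deploy `model` only if it beats the current production model by `min_gain`."""
        current: Optional[ModelVersion] = self.production.get(model.name)
        if current is None or model.holdout_accuracy >= current.holdout_accuracy + min_gain:
            self.production[model.name] = model
            self.audit_log.append(f"promoted {model.name} v{model.version}")
            return True
        self.audit_log.append(f"rejected {model.name} v{model.version}")
        return False


if __name__ == "__main__":
    registry = ModelRegistry()
    for v, acc in [(1, 0.80), (2, 0.805), (3, 0.83)]:
        model = ModelVersion("churn", v, acc)
        registry.register(model)
        registry.promote_if_better(model)
    print(registry.production["churn"].version)  # prints 3 (v2 gained too little)
```

Requiring a minimum gain, rather than any improvement, keeps noisy evaluation differences from triggering constant redeployments.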

How Mirroring the Architecture of the Human Brain Is Speeding Up AI Learning

While AI can carry out some impressive feats when trained on millions of data points, the human brain can often learn from a tiny number of examples. New research shows that borrowing architectural principles from the brain can help AI get closer to our visual prowess.

The prevailing wisdom in deep learning research is that the more data you throw at an algorithm, the better it will learn.

… This prompted a pair of neuroscientists to see whether they could design an AI that learns from only a few data points by borrowing principles from how we think the brain solves this problem. In a paper in Frontiers in Computational Neuroscience, they report that the approach significantly boosts AI’s ability to learn new visual concepts from few examples.
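The paper's model is not described in this excerpt, so the sketch below does not reproduce it. It illustrates a generic few-shot idea instead: if a system already has good features from earlier learning, a new concept can be learned by averaging a handful of example feature vectors into a prototype and classifying new inputs by the nearest prototype. The 2-D feature vectors and labels are hypothetical stand-ins for features a pretrained vision model would produce.

```python
import math
from typing import Dict, List, Sequence

Vector = Sequence[float]


def mean_vector(vectors: List[Vector]) -> List[float]:
    """Average a few feature vectors into one class prototype."""
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def fit_prototypes(support: Dict[str, List[Vector]]) -> Dict[str, List[float]]:
    """Learn each new concept from just a handful of labelled examples."""
    return {label: mean_vector(examples) for label, examples in support.items()}


def classify(prototypes: Dict[str, List[float]], x: Vector) -> str:
    """Assign x to the concept whose prototype is nearest (Euclidean distance)."""
    return min(prototypes, key=lambda label: math.dist(prototypes[label], x))


if __name__ == "__main__":
    # Hypothetical features: two examples per new concept.
    support = {
        "cat": [[0.9, 0.1], [0.8, 0.2]],
        "car": [[0.1, 0.9], [0.2, 0.8]],
    }
    prototypes = fit_prototypes(support)
    print(classify(prototypes, [0.7, 0.3]))  # prints: cat
```

All of the heavy lifting sits in the (assumed) pretrained features; the new-concept step itself needs no gradient training, which is why two examples per class can be enough.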

Von Neumann Is Struggling

In an era dominated by machine learning, the von Neumann architecture is struggling to stay relevant.

The world has shifted from being control-centric to data-centric, pushing processor architectures to evolve. Venture money is flooding into domain-specific architectures (DSAs), but traditional processors are also evolving, and for many markets they continue to provide an effective solution.

The von Neumann architecture for general-purpose computing was first described in 1945 and stood the test of time until the turn of the millennium. … Scalability slowed around 2000. Then Dennard scaling broke down in 2007, and power consumption became a limiter. Although the industry didn’t recognize it at the time, that was its biggest inflection point to date. It was the end of instruction-level parallelism.
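For background (standard textbook material rather than something stated in the excerpt), Dennard scaling explains why power was not a limiter earlier. Dynamic power is approximately P ≈ C·V²·f, where C is a transistor's switched capacitance, V its supply voltage, f the clock frequency and A its area. If each generation shrinks dimensions and voltage by a factor κ > 1 and raises frequency by κ, power per transistor falls by κ² while area also falls by κ², so power density stays constant. If voltage stops scaling while frequency keeps rising, power density instead grows by κ²:

$$
\begin{aligned}
P &\approx C V^2 f \\
P_{\text{Dennard}} &= \frac{C}{\kappa}\left(\frac{V}{\kappa}\right)^2 (\kappa f) = \frac{C V^2 f}{\kappa^2},
&\qquad \frac{P_{\text{Dennard}}}{A/\kappa^2} &= \frac{C V^2 f}{A} \\
P_{\text{fixed } V} &= \frac{C}{\kappa}\, V^2 (\kappa f) = C V^2 f,
&\qquad \frac{P_{\text{fixed } V}}{A/\kappa^2} &= \kappa^2\, \frac{C V^2 f}{A}
\end{aligned}
$$

That κ² growth in heat per unit area is why clock frequencies stalled once voltage scaling ended.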

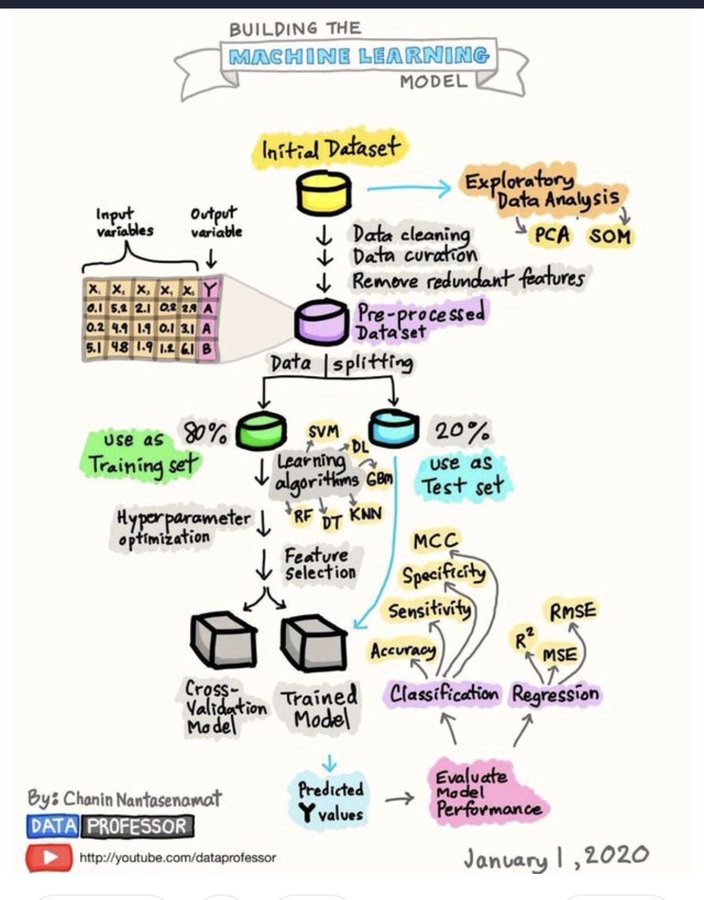

Data Professor

New weekly videos on Data Science, AI, Big Data, Machine Learning, and Bioinformatics.