The infamous “trolley problem” was put to millions of people in a global study, revealing how much ethics diverge across cultures.

In 2014 researchers at the MIT Media Lab designed an experiment called Moral Machine. The idea was to create a game-like platform that would crowdsource people’s decisions on how self-driving cars should prioritize lives in different variations of the “trolley problem.” In the process, the data generated would provide insight into the collective ethical priorities of different cultures.

… A new paper published in Nature presents the analysis of that data and reveals how much cross-cultural ethics diverge on the basis of culture, economics, and geographic location. Read More

Daily Archives: January 21, 2021

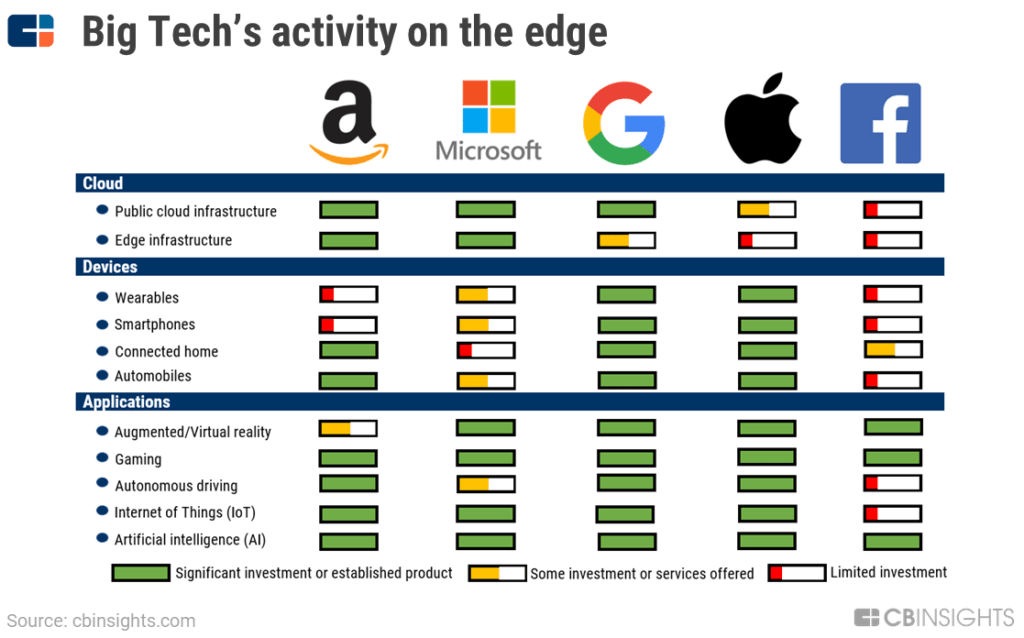

Big Tech In Edge Computing

Companies are addressing issues related to language with AI

Artificial intelligence (AI) has been impacting not only human lives but also various industries. Its tools, like deep learning, can increasingly teach themselves how to perform complex tasks. Besides, self-driving cars are about to hit the streets, and diseases are being treated using AI technology.

Yet despite these impressive advances, one fundamental capability remains elusive: language. Systems like Siri, Amazon’s Alexa and IBM’s Watson can follow simple spoken or typed commands and answer basic questions, but they can’t hold a conversation and have no real understanding of the words they use. If artificial intelligence is to be truly transformative, this must change. Read More

The past, present and future of deep learning

TLDR; In this blog, you’ll be learning the theoretical aspects of deep learning (DL) and how it has evolved, right from the study of the human brain to building complex algorithms. Next, you’ll be looking at a few pieces of research that have been carried by renowned deep learning folks who have then sown the sapling in the fields of DL which has now grown into a gigantic tree. Lastly, you’ll be introduced to the applications and the areas where deep learning has set a strong foothold. Read More

Gun Detection AI is Being Trained With Homemade ‘Active Shooter’ Videos

Companies are using bizarre methods to create algorithms that automatically detect weapons. AI ethicists worry they will lead to more police violence.

In Huntsville, Alabama, there is a room with green walls and a green ceiling. Dangling down the center is a fishing line attached to a motor mounted to the ceiling, which moves a procession of guns tied to the translucent line.

The staff at Arcarithm bought each of the 10 best-selling firearm models in the U.S.: Rugers, Glocks, Sig Sauers. Pistols and long guns are dangled from the line. The motor rotates them around the room, helping a camera mounted to a mobile platform photograph them from multiple angles. “ Read More

Learning Transferable Visual Models From Natural Language Supervision

State-of-the-art computer vision systems are trained to predict a fixed set of predetermined object categories. This restricted form of super-vision limits their generality and usability since additional labeled data is needed to specify any other visual concept. Learning directly from raw text about images is a promising alternative which leverages a much broader source of supervision.We demonstrate that the simple pretraining task of predicting which caption goes with which image is an efficient and scalable way to learn SOTA image representations from scratch on a dataset of 400 million (image, text) pairs collected from the internet. After pretraining, natural language is used to reference learned visual concepts (or describe new ones) enabling zero-shot transfer of the model to downstream tasks. We study the performance of this approach by benchmarking on over 30 different existing computer vision datasets, spanning tasks such as OCR, action recognition in videos, geo-localization, and many types of fine-grained object classification. The model transfers non-trivially to most tasks and is often competitive with a fully supervised baseline without the need for any dataset specific training.For instance, we match the accuracy of the original ResNet-50 on ImageNet zero-shot without needing to use any of the 1.28 million training examples it was trained on. Read More

#image-recognition, #nlpLarge-Scale Adversarial Training for Vision-and-Language Representation Learning

We present VILLA, the first known effort on large-scale adversarial training for vision-and-language (V+L) representation learning.VILLA consists of two training stages: (i) task-agnostic adversarial pretraining; followed by (ii) task-specific adversarial fine tuning. Instead of adding adversarial perturbations on image pixels and textual tokens, we propose to perform adversarial training in the embedding space of each modality. To enable large-scale training, we adopt the “free” adversarial training strategy, and combine it with KL-divergence-based regularization to promote higher invariance in the embedding space. We apply VILLA to current best-performing V+L models, and achieve new state of the art on a wide range of tasks, including Visual Question Answering, Visual Commonsense Reasoning,Image-Text Retrieval, Referring Expression Comprehension, Visual Entailment,and NLVR. Read More