We present VILLA, the first known effort on large-scale adversarial training for vision-and-language (V+L) representation learning. VILLA consists of two training stages: (i) task-agnostic adversarial pretraining, followed by (ii) task-specific adversarial fine-tuning. Instead of adding adversarial perturbations to image pixels and textual tokens, we propose to perform adversarial training in the embedding space of each modality. To enable large-scale training, we adopt the “free” adversarial training strategy and combine it with KL-divergence-based regularization to promote higher invariance in the embedding space. We apply VILLA to current best-performing V+L models and achieve a new state of the art on a wide range of tasks, including Visual Question Answering, Visual Commonsense Reasoning, Image-Text Retrieval, Referring Expression Comprehension, Visual Entailment, and NLVR.
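To make "adversarial training in the embedding space" concrete, here is a minimal sketch built on heavy simplifying assumptions:

- A toy linear softmax classifier (hypothetical `W`) over a single embedding vector, rather than separate image and text embeddings.
- A few steps of projected gradient ascent on a perturbation `delta` kept inside an L2 ball.
- An objective of cross-entropy on the perturbed embedding plus a one-directional KL(p_clean ‖ p_adv) term.

This is not VILLA's implementation. The paper's exact regularizer is not reproduced. Neither is the "free" strategy, which reuses each backward pass to update both the perturbation and the model weights. All names and hyperparameters (`eps`, `step`, `alpha`) are illustrative.

```python
import numpy as np

def softmax(z):
    z = z - z.max()  # shift for numerical stability
    e = np.exp(z)
    return e / e.sum()

def adversarial_objective(W, emb, delta, label, alpha):
    """Cross-entropy on the perturbed embedding plus alpha * KL(p_clean || p_adv)."""
    p_clean = softmax(W @ emb)
    p_adv = softmax(W @ (emb + delta))
    ce = -np.log(p_adv[label])
    kl = np.sum(p_clean * (np.log(p_clean) - np.log(p_adv)))
    return ce + alpha * kl

def perturb_embedding(W, emb, label, eps=0.05, step=0.02, n_steps=3, alpha=1.0):
    """Projected gradient ascent on an embedding perturbation (toy, hypothetical setup)."""
    p_clean = softmax(W @ emb)
    onehot = np.zeros(W.shape[0])
    onehot[label] = 1.0
    delta = np.zeros_like(emb)
    for _ in range(n_steps):
        p_adv = softmax(W @ (emb + delta))
        # Gradient w.r.t. the logits: CE gives (p_adv - onehot); KL(p_clean || p_adv) gives (p_adv - p_clean).
        grad_logits = (p_adv - onehot) + alpha * (p_adv - p_clean)
        grad = W.T @ grad_logits  # chain rule back to the embedding
        norm = np.linalg.norm(grad)
        if norm == 0:
            break
        delta = delta + step * grad / norm  # normalized ascent step
        d_norm = np.linalg.norm(delta)
        if d_norm > eps:  # project back onto the L2 ball of radius eps
            delta = delta * (eps / d_norm)
    return delta

rng = np.random.default_rng(0)
W = 0.1 * rng.standard_normal((3, 8))  # hypothetical 3-class head over an 8-d embedding
emb = rng.standard_normal(8)           # hypothetical embedding of one input
delta = perturb_embedding(W, emb, label=1)
print(adversarial_objective(W, emb, np.zeros(8), 1, 1.0),
      adversarial_objective(W, emb, delta, 1, 1.0))
```

In full adversarial training, the model would then be updated to minimize this same objective at the perturbed embedding.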

Monthly Archives: January 2021

Natural Language Generation (Practical Guide)

Natural Language Generation (NLG) is a branch of AI that generates human language from structured data. It is closely related to Natural Language Processing (NLP), but the two are distinct.

Put simply, NLP lets the computer read, and NLG lets it write. NLG is a fast-growing field that lets computers communicate in human language. It is currently used for writing suggestions, such as email autocompletion, and even for producing human-readable text without any human intervention.

In this article, you will learn in practice how this process works and how to generate artificial news headlines, indistinguishable from real ones, with just a few lines of code.
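To show the basic idea of generating text from learned statistics, here is a deliberately tiny technique: a bigram Markov chain that learns which word tends to follow which in a set of headlines, then takes a random walk through those transitions. This is a swapped-in illustration, far simpler than the neural models typically used for realistic headlines, and not necessarily the article's method. The training headlines are hypothetical.

```python
import random
from collections import defaultdict

START, END = "<s>", "</s>"  # sentinel tokens marking headline boundaries

def build_bigram_model(headlines):
    """Map each token to the list of tokens observed right after it (duplicates keep the frequencies)."""
    model = defaultdict(list)
    for line in headlines:
        tokens = [START] + line.split() + [END]
        for prev, nxt in zip(tokens, tokens[1:]):
            model[prev].append(nxt)
    return model

def generate_headline(model, rng, max_words=12):
    """Random walk from START, sampling each next word in proportion to its observed frequency."""
    words, current = [], START
    while len(words) < max_words:
        current = rng.choice(model[current])
        if current == END:
            break
        words.append(current)
    return " ".join(words)

# Hypothetical training headlines; a real system would learn from thousands of them.
headlines = [
    "stocks rally as tech earnings beat forecasts",
    "tech giants face new privacy rules",
    "new rules could reshape tech earnings",
]
model = build_bigram_model(headlines)
print(generate_headline(model, random.Random(42)))
```

Because shared words such as "tech" and "rules" link different headlines, the walk can splice fragments into headlines that never appeared in the training set.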

How MLOps Can Help Get AI Projects to Deployment

Did you know that most AI projects never get fully deployed? In fact, a recent survey by NewVantage Partners revealed that only 15% of leading enterprises have put any AI into production at all. Many models are built and trained but never reach the business scenarios where they could provide insights and value. This gap, known as the production gap, leaves models unused, wastes resources, and stops AI ROI in its tracks. But technology is not what is holding things back. In most cases, the barriers to businesses and organizations becoming data-driven can be reduced to three things: people, process, and culture. So how can organizations overcome these challenges and start getting real value from AI? To close the production gap and finally get ROI from their AI, enterprises should consider formalizing an MLOps strategy.

MLOps, or machine learning operations, refers to the combination of people, processes, practices, and underpinning technologies that automate the deployment, monitoring, and management of machine learning models in production in a scalable, fully governed way.
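The monitoring part of that definition can be made concrete with a drift check. It compares the distribution of a feature at training time with what the model sees in production. The sketch uses the population stability index (PSI), one common choice among many; the source does not prescribe any particular metric. The function names, the 0.2 alert threshold, and the sample data are illustrative assumptions.

```python
import math

def population_stability_index(expected, actual, bins=10):
    """PSI between a reference sample and a live sample, using equal-width bins over their joint range."""
    lo = min(min(expected), min(actual))
    hi = max(max(expected), max(actual))
    width = (hi - lo) / bins or 1.0  # guard against a zero range
    def proportions(values):
        counts = [0] * bins
        for v in values:
            counts[min(int((v - lo) / width), bins - 1)] += 1
        # Floor empty bins at a tiny proportion so the log below is defined.
        return [max(c / len(values), 1e-6) for c in counts]
    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

def drift_alert(psi, threshold=0.2):
    """Hypothetical alert rule; 0.2 is a commonly quoted rule of thumb, not a universal standard."""
    return psi > threshold

baseline = [i / 100 for i in range(100)]   # hypothetical training-time feature values
live = [0.5 + i / 200 for i in range(100)]  # hypothetical production values, shifted upward
psi = population_stability_index(baseline, live)
print(f"PSI = {psi:.2f}, alert = {drift_alert(psi)}")
```

In an automated pipeline, a check like this would run on a schedule and trigger retraining or a human review rather than just printing.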

How Mirroring the Architecture of the Human Brain Is Speeding Up AI Learning

While AI can carry out some impressive feats when trained on millions of data points, the human brain can often learn from a tiny number of examples. New research shows that borrowing architectural principles from the brain can help AI get closer to our visual prowess.

The prevailing wisdom in deep learning research is that the more data you throw at an algorithm, the better it will learn.

… This prompted a pair of neuroscientists to see if they could design an AI that could learn from few data points by borrowing principles from how we think the brain solves this problem. In a paper in Frontiers in Computational Neuroscience, they explained that the approach significantly boosts AI’s ability to learn new visual concepts from few examples.
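The entry does not describe the model's internals, so here is a stand-in: one common few-shot baseline, not the paper's method. Average a handful of feature vectors per new concept into a prototype, then label a query by its nearest prototype. The feature vectors are assumed to come from some pretrained network. The labels and numbers are hypothetical.

```python
import math

def class_prototypes(support):
    """support maps each label to a few feature vectors; return each label's mean vector."""
    prototypes = {}
    for label, vectors in support.items():
        dim = len(vectors[0])
        prototypes[label] = [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]
    return prototypes

def classify(query, prototypes):
    """Assign the label whose prototype is closest in Euclidean distance."""
    return min(prototypes, key=lambda label: math.dist(query, prototypes[label]))

# Hypothetical 2-d features for two new concepts, two examples each.
support = {"cat": [[0.0, 0.0], [0.0, 1.0]], "dog": [[5.0, 5.0], [6.0, 5.0]]}
prototypes = class_prototypes(support)
print(classify([0.2, 0.4], prototypes))  # cat
```

The heavy lifting is in the feature space. If good high-level features already exist, two examples per concept can be enough to place a usable prototype.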

Von Neumann Is Struggling

In an era dominated by machine learning, the von Neumann architecture is struggling to stay relevant.

The world has changed from being control-centric to one that is data-centric, pushing processor architectures to evolve. Venture money is flooding into domain-specific architectures (DSAs), but traditional processors are also evolving. For many markets, they continue to provide an effective solution.

The von Neumann architecture for general-purpose computing was first described in 1945 and stood the test of time until the turn of the millennium. … Scalability slowed around 2000. Then, in 2007, the end of Dennard scaling made power consumption a limiter. Although the industry didn’t recognize it at the time, that was its biggest inflection point to date. It was the end of instruction-level parallelism.
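The textbook dynamic-power relation shows why the end of Dennard scaling turned power into the limiter. The back-of-the-envelope sketch below is idealized: it ignores leakage current and the activity factor, and uses a generic scaling factor κ > 1. It is not taken from the article.

```latex
\begin{aligned}
P &= C\,V^{2} f
  && \text{dynamic switching power per transistor}\\
C &\to C/\kappa,\quad V \to V/\kappa,\quad f \to \kappa f,\quad A \to A/\kappa^{2}
  && \text{classical Dennard scaling}\\
P' &= \frac{C}{\kappa}\cdot\frac{V^{2}}{\kappa^{2}}\cdot\kappa f = \frac{P}{\kappa^{2}},
  \qquad \frac{P'}{A'} = \frac{P/\kappa^{2}}{A/\kappa^{2}} = \frac{P}{A}
  && \text{power density stays constant}\\
P' &= \frac{C}{\kappa}\cdot V^{2}\cdot\kappa f = P,
  \qquad \frac{P'}{A'} = \kappa^{2}\,\frac{P}{A}
  && \text{if } V \text{ stops scaling}
\end{aligned}
```

Once supply voltage can no longer shrink with feature size, each shrink multiplies power density by κ². Clock frequency and the number of simultaneously active transistors then become bounded by heat rather than by transistor count.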

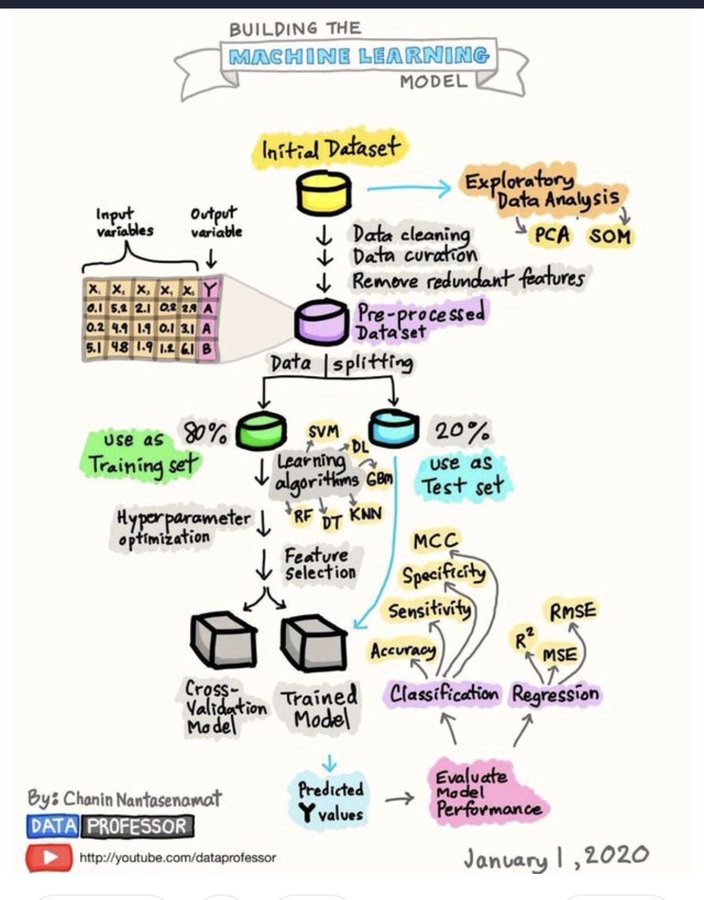

Data Professor

New weekly videos on Data Science, AI, Big Data, Machine Learning, and Bioinformatics.

Forget coding, you can now solve your AI problems with Excel

Machine learning and deep learning have become an important part of many applications we use every day. Few domains remain untouched by the fast expansion of machine learning. … But mastering machine learning is a difficult process. You need to start with a solid knowledge of linear algebra and calculus, master a programming language such as Python, and become proficient with data science and machine learning libraries such as NumPy, scikit-learn, TensorFlow, and PyTorch. And if you want to create machine learning systems that integrate and scale, you’ll have to learn cloud platforms such as Amazon Web Services (AWS), Microsoft Azure, and Google Cloud.

… To most people, MS Excel is a spreadsheet application that stores data in tabular format and performs very basic mathematical operations. In reality, though, Excel is a powerful computation tool that can solve complicated problems. It also has many features that let you create machine learning models directly in your workbooks.
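Simple linear regression is one example of a model a workbook can hold. Excel's built-in SLOPE and INTERCEPT functions compute an ordinary least-squares fit, and LINEST does the same for more than one predictor. The Python sketch below spells out that arithmetic so the formulas are visible. The data and cell ranges are hypothetical.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit y = slope * x + intercept (what Excel's SLOPE and INTERCEPT return)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical data; in a worksheet with x in A2:A5 and y in B2:B5 the equivalents would be
# =SLOPE(B2:B5, A2:A5) and =INTERCEPT(B2:B5, A2:A5).
slope, intercept = fit_line([1, 2, 3, 4], [3, 5, 7, 9])
print(slope, intercept)  # 2.0 1.0
```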

Implicit coordination for 3D underwater collective behaviors in a fish-inspired robot swarm

Many fish species gather by the thousands and swim in harmony with seemingly no effort. Large schools display a range of impressive collective behaviors, from simple shoaling to collective migration and from basic predator evasion to dynamic maneuvers such as bait balls and flash expansion. A wealth of experimental and theoretical work has shown that these complex three-dimensional (3D) behaviors can arise from visual observations of nearby neighbors, without explicit communication. By contrast, most underwater robot collectives rely on centralized, above-water, explicit communication and, as a result, exhibit limited coordination complexity. Here, we demonstrate 3D collective behaviors with a swarm of fish-inspired miniature underwater robots that use only implicit communication mediated through the production and sensing of blue light. We show that complex and dynamic 3D collective behaviors (synchrony, dispersion/aggregation, dynamic circle formation, and search-capture) can be achieved by sensing minimal, noisy impressions of neighbors, without any centralized intervention. Our results provide insights into the power of implicit coordination and are of interest for future underwater robots that display collective capabilities on par with fish schools for applications such as environmental monitoring and search in coral reefs and coastal environments.
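Synchrony emerging from simple mutual influence has a classic textbook model: the Kuramoto model of coupled oscillators, in which each unit nudges its phase toward the group's average phase. This sketch is a swapped-in illustration, not the robots' algorithm. The robots coordinate through blue-light sensing, which is noisier and more local than the all-to-all coupling assumed here. The number of oscillators, coupling strength, and frequency spread are arbitrary assumptions.

```python
import cmath
import math
import random

def order_parameter(phases):
    """Kuramoto order parameter r in [0, 1]; r close to 1 means the phases are synchronized."""
    return abs(sum(cmath.exp(1j * th) for th in phases) / len(phases))

def simulate_kuramoto(n=20, coupling=2.0, dt=0.01, steps=5000, seed=0):
    """Euler integration of the mean-field Kuramoto model: dθ_i/dt = ω_i + K r sin(ψ - θ_i)."""
    rng = random.Random(seed)
    omegas = [rng.uniform(0.9, 1.1) for _ in range(n)]          # slightly different natural frequencies
    phases = [rng.uniform(0.0, 2 * math.pi) for _ in range(n)]  # random starting phases
    for _ in range(steps):
        mean_field = sum(cmath.exp(1j * th) for th in phases) / n
        r, psi = abs(mean_field), cmath.phase(mean_field)
        phases = [th + dt * (w + coupling * r * math.sin(psi - th))
                  for th, w in zip(phases, omegas)]
    return phases

rng0 = random.Random(0)
print(f"final order parameter: {order_parameter(simulate_kuramoto()):.3f}")
```

No unit is in charge. Each one only reacts to an aggregate impression of the others, yet the group locks into a common rhythm once the coupling is strong enough relative to the spread of natural frequencies.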

Facial recognition technology can expose political orientation from naturalistic facial images

Ubiquitous facial recognition technology can expose individuals’ political orientation, as faces of liberals and conservatives consistently differ. A facial recognition algorithm was applied to naturalistic images of 1,085,795 individuals to predict their political orientation by comparing their similarity to faces of liberal and conservative others. Political orientation was correctly classified in 72% of liberal–conservative face pairs, remarkably better than chance (50%), human accuracy (55%), or one afforded by a 100-item personality questionnaire (66%). Accuracy was similar across countries (the U.S., Canada, and the UK), environments (Facebook and dating websites), and when comparing faces across samples. Accuracy remained high (69%) even when controlling for age, gender, and ethnicity. Given the widespread use of facial recognition, our findings have critical implications for the protection of privacy and civil liberties.
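The 72% figure is a pairwise measure. Take one liberal and one conservative face and check whether the algorithm ranks them correctly; guessing gives 50%. Computed over all such pairs, this is the same quantity as the area under the ROC curve (AUC). Here is a minimal sketch with hypothetical scores, assuming a higher score means "predicted more liberal":

```python
def pairwise_accuracy(scores_pos, scores_neg):
    """Fraction of (positive, negative) pairs ranked correctly; ties count as half. Equals ROC AUC."""
    wins = 0.0
    for sp in scores_pos:
        for sn in scores_neg:
            if sp > sn:
                wins += 1.0
            elif sp == sn:
                wins += 0.5
    return wins / (len(scores_pos) * len(scores_neg))

liberal_scores = [0.9, 0.8, 0.4]    # hypothetical scores for faces of liberals
conservative_scores = [0.7, 0.3]    # hypothetical scores for faces of conservatives
print(pairwise_accuracy(liberal_scores, conservative_scores))  # 5 of 6 pairs correct
```

Unlike plain accuracy at a fixed threshold, this measure does not depend on where a cutoff is placed, which is why chance is exactly 50% regardless of class balance.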

Jumbled-up sentences show that AIs still don’t really understand language

Many AIs that appear to understand language and that score better than humans on a common set of comprehension tasks don’t notice when the words in a sentence are jumbled up, which shows that they don’t really understand language at all. The problem lies in the way natural-language processing (NLP) systems are trained; it also points to a way to make them better.

Researchers at Auburn University in Alabama and Adobe Research discovered the flaw when they tried to get an NLP system to generate explanations for its behavior, such as why it claimed different sentences meant the same thing. When they tested their approach, they realized that shuffling words in a sentence made no difference to the explanations. “This is a general problem to all NLP models,” says Anh Nguyen at Auburn University, who led the work.
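A word-shuffle probe like the one described is easy to set up: compare a model's output on a sentence with its output on the same words in random order. The sketch uses a bag-of-words cosine similarity as a deliberately extreme stand-in for a real NLP model; the researchers tested neural models, not this one. Because it ignores word order by construction, it cannot tell "the dog chased the cat" from "the cat chased the dog". The function names and sentences are hypothetical.

```python
import math
import random
from collections import Counter

def bow_cosine(a, b):
    """Cosine similarity of word-count vectors; word order is discarded entirely."""
    ca, cb = Counter(a.lower().split()), Counter(b.lower().split())
    dot = sum(ca[w] * cb[w] for w in ca)
    norm = math.sqrt(sum(v * v for v in ca.values())) * math.sqrt(sum(v * v for v in cb.values()))
    return dot / norm if norm else 0.0

def shuffle_words(sentence, seed=0):
    """Return the sentence with its words in a random (seeded) order."""
    words = sentence.split()
    random.Random(seed).shuffle(words)
    return " ".join(words)

original = "the dog chased the cat"
probe = shuffle_words(original)
# A model that truly used word order should score these two comparisons differently.
print(bow_cosine(original, "a dog chased a cat"), bow_cosine(probe, "a dog chased a cat"))
```

A neural model that behaves like this scorer on shuffled inputs is, in effect, relying on which words appear rather than how they are arranged.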