Human intelligence emerges from our combination of senses and language abilities. Maybe the same is true for artificial intelligence.

In late 2012, AI scientists first figured out how to get neural networks to “see.” They proved that software designed to loosely mimic the human brain could dramatically improve existing computer-vision systems. The field has since learned how to get neural networks to imitate the way we reason, hear, speak, and write.

But while AI has grown remarkably human-like, even superhuman, at achieving a specific task, it still doesn’t capture the flexibility of the human brain. We can learn skills in one context and apply them to another. By contrast, though DeepMind’s game-playing algorithm AlphaGo can beat the world’s best Go masters, it can’t extend that strategy beyond the board. Deep-learning algorithms, in other words, are masters at picking up patterns, but they cannot understand and adapt to a changing world.

Daily Archives: March 15, 2021

Face editing with Generative Adversarial Networks

The Deep Bootstrap Framework: Good Online Learners are Good Offline Generalizers

We propose a new framework for reasoning about generalization in deep learning. The core idea is to couple the Real World, where optimizers take stochastic gradient steps on the empirical loss, to an Ideal World, where optimizers take steps on the population loss. This leads to an alternate decomposition of test error into: (1) the Ideal World test error plus (2) the gap between the two worlds. If the gap (2) is universally small, this reduces the problem of generalization in offline learning to the problem of optimization in online learning. We then give empirical evidence that this gap between worlds can be small in realistic deep learning settings, in particular supervised image classification. For example, CNNs generalize better than MLPs on image distributions in the Real World, but this is “because” they optimize faster on the population loss in the Ideal World. This suggests our framework is a useful tool for understanding generalization in deep learning, and lays a foundation for future research in the area.
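The decomposition described in the abstract can be written out as a simple identity. The notation here is an assumption made for illustration and is not taken from the excerpt: $f_t$ stands for the Real World model after $t$ stochastic gradient steps on the empirical loss, and $f_t^{\mathrm{ideal}}$ for the Ideal World model after $t$ steps on the population loss.

```latex
\[
\mathrm{TestError}(f_t)
  = \underbrace{\mathrm{TestError}\bigl(f_t^{\mathrm{ideal}}\bigr)}_{\text{(1) Ideal World test error}}
  + \underbrace{\Bigl[\mathrm{TestError}(f_t)
      - \mathrm{TestError}\bigl(f_t^{\mathrm{ideal}}\bigr)\Bigr]}_{\text{(2) gap between the two worlds}}
\]
```

Written this way, the identity holds trivially. The substantive claim is the empirical one: when term (2) is small, how well the Real World model generalizes is governed by how quickly the Ideal World model optimizes.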

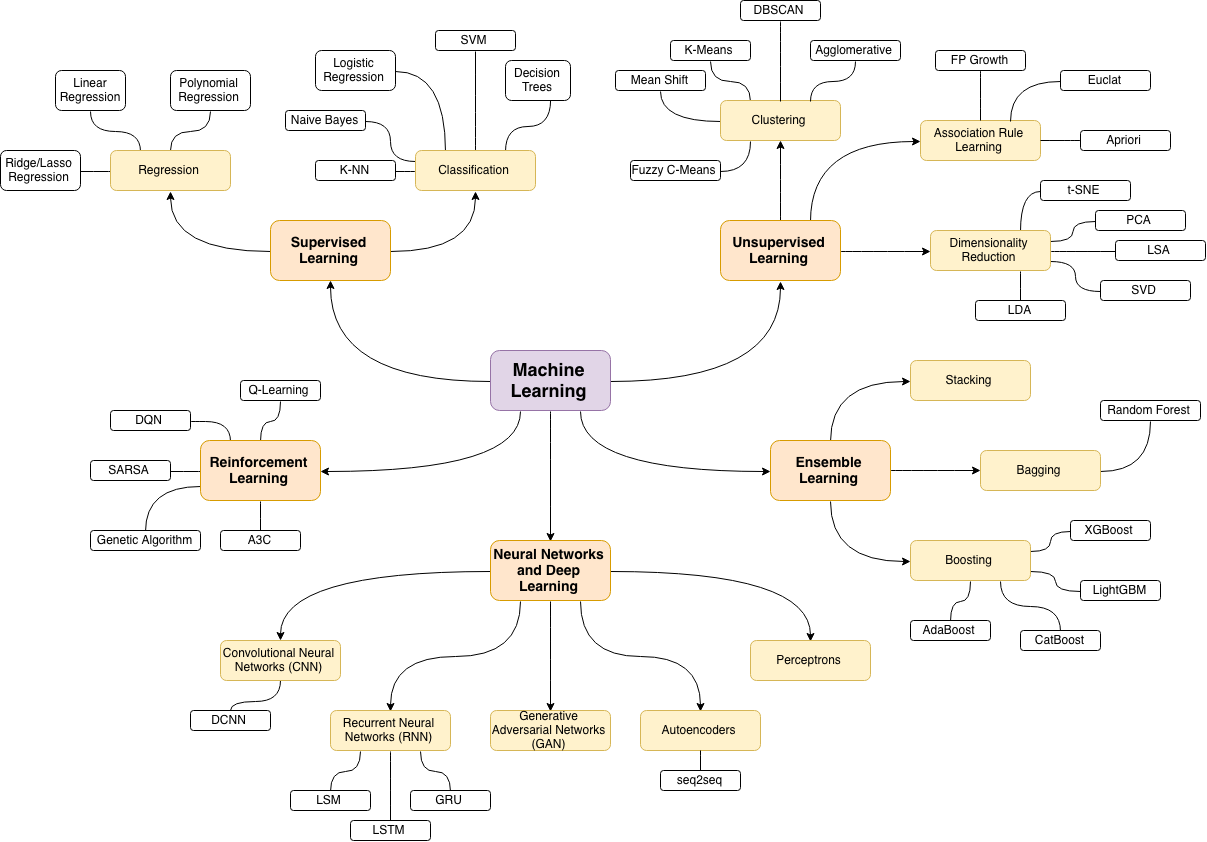

Homemade Machine Learning

The purpose of this repository is not to implement machine learning algorithms using third-party library one-liners, but rather to practice implementing these algorithms from scratch and gain a better understanding of the mathematics behind each algorithm. That’s why all algorithm implementations are called “homemade” and are not intended for production use.

Adobe Photoshop uses AI to quadruple your photo’s size

Super resolution blows up a 12-megapixel smartphone photo into a much larger 48-megapixel shot. It’s coming to Lightroom soon, too.
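As a quick check of the arithmetic, quadrupling the pixel count while keeping the aspect ratio means scaling each side by the square root of four, that is, doubling width and height. The sketch below assumes a hypothetical 4000 × 3000 sensor (a common 4:3 layout for 12 megapixels); the function name and dimensions are illustrative and not taken from Adobe's documentation.

```python
import math


def upscaled_dimensions(width, height, pixel_factor=4):
    """Return the (width, height) after multiplying the pixel count by pixel_factor.

    Keeping the aspect ratio fixed, each side grows by sqrt(pixel_factor).
    """
    side_factor = math.sqrt(pixel_factor)
    return round(width * side_factor), round(height * side_factor)


# Hypothetical 12-megapixel photo in a 4:3 aspect ratio.
width, height = 4000, 3000
new_width, new_height = upscaled_dimensions(width, height)

megapixels_before = width * height / 1e6
megapixels_after = new_width * new_height / 1e6
print(f"{width}x{height} ({megapixels_before:.0f} MP) -> "
      f"{new_width}x{new_height} ({megapixels_after:.0f} MP)")
```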

Artificial intelligence leads NATO’s new strategy for emerging and disruptive tech

NATO and its member nations have formally agreed upon how the alliance should target and coordinate investments in emerging and disruptive technology, or EDT, with plans to release artificial intelligence and data strategies by the summer of 2021.

In recent years the alliance has publicly declared its need to focus on so-called EDTs, and identified seven science and technology areas that are of direct interest. Now, the NATO enterprise and representatives of its 30 member states have endorsed a strategy that shows how the alliance can both foster these technologies (through stronger relationships with innovation hubs and specific funding mechanisms) and protect EDT investments from outside influence and export issues.

Multi-modal Self-Supervision from Generalized Data Transformations

The recent success of self-supervised learning can be largely attributed to content-preserving transformations, which can be used to easily induce invariances. While transformations generate positive sample pairs in contrastive loss training, most recent work focuses on developing new objective formulations, and pays relatively little attention to the transformations themselves. In this paper, we introduce the framework of Generalized Data Transformations to (1) reduce several recent self-supervised learning objectives to a single formulation for ease of comparison, analysis, and extension, (2) allow a choice between being invariant or distinctive to data transformations, obtaining different supervisory signals, and (3) derive the conditions that combinations of transformations must obey in order to lead to well-posed learning objectives. This framework allows both invariance and distinctiveness to be injected into representations simultaneously, and lets us systematically explore novel contrastive objectives. We apply it to study multi-modal self-supervision for audio-visual representation learning from unlabelled videos, improving the state-of-the-art by a large margin, and even surpassing supervised pretraining. We demonstrate results on a variety of downstream video and audio classification and retrieval tasks, on datasets such as HMDB-51, UCF-101, DCASE2014, ESC-50 and VGG-Sound. In particular, we achieve new state-of-the-art accuracies of 72.8% on HMDB-51 and 95.2% on UCF-101.
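To make "positive sample pairs in contrastive loss training" concrete, here is a minimal sketch of a generic InfoNCE-style contrastive loss in plain Python. It is not the paper's Generalized Data Transformations objective; the function names, the temperature value, and the toy embeddings are all assumptions chosen for illustration. Each anchor embedding is pulled toward the embedding of its own transformed view (the positive) and pushed away from the views of the other samples in the batch (the negatives).

```python
import math


def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def normalize(v):
    """Scale a vector to unit length so dot products are cosine similarities."""
    norm = math.sqrt(dot(v, v))
    return [a / norm for a in v]


def info_nce_loss(anchors, positives, temperature=0.1):
    """Average InfoNCE-style loss over a batch of paired embeddings.

    anchors[i] and positives[i] are embeddings of two transformed views of
    sample i (for example, the video and the audio of the same clip).
    Every positives[j] with j != i acts as a negative for anchors[i].
    """
    a = [normalize(v) for v in anchors]
    p = [normalize(v) for v in positives]
    total = 0.0
    for i in range(len(a)):
        logits = [dot(a[i], p[j]) / temperature for j in range(len(p))]
        # Numerically stable log-sum-exp over all candidates.
        m = max(logits)
        log_denominator = m + math.log(sum(math.exp(x - m) for x in logits))
        # Negative log-probability of picking the true positive.
        total += log_denominator - logits[i]
    return total / len(a)


# Toy embeddings (hypothetical): two samples in a 2-D embedding space.
aligned = [[1.0, 0.0], [0.0, 1.0]]
shuffled = [[0.0, 1.0], [1.0, 0.0]]

loss_aligned = info_nce_loss(aligned, aligned)    # positives match their anchors
loss_shuffled = info_nce_loss(aligned, shuffled)  # positives are mismatched
print(loss_aligned < loss_shuffled)
```

The loss is low when each anchor is most similar to its own positive and high when the pairs are mismatched, which is the signal that the choice of transformations controls.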

#image-recognition, #self-supervised

Facebook’s next big AI project is training its machines on users’ public videos

AI that can understand video could be put to a variety of uses

Teaching AI systems to understand what’s happening in videos as completely as a human can is one of the hardest challenges — and biggest potential breakthroughs — in the world of machine learning. Today, Facebook announced a new initiative that it hopes will give it an edge in this consequential work: training its AI on Facebook users’ public videos.

Access to training data is one of the biggest competitive advantages in AI, and by collecting this resource from millions and millions of their users, tech giants like Facebook, Google, and Amazon have been able to forge ahead in various areas. And while Facebook has already trained machine vision models on billions of images collected from Instagram, it hasn’t previously announced projects of similar ambition for video understanding.