SpeechBrain is an open-source and all-in-one speech toolkit based on PyTorch.

The goal is to create a single, flexible, and user-friendly toolkit that can be used to easily develop state-of-the-art speech technologies, including systems for speech recognition, speaker recognition, speech enhancement, multi-microphone signal processing and many others.

Tag Archives: Python

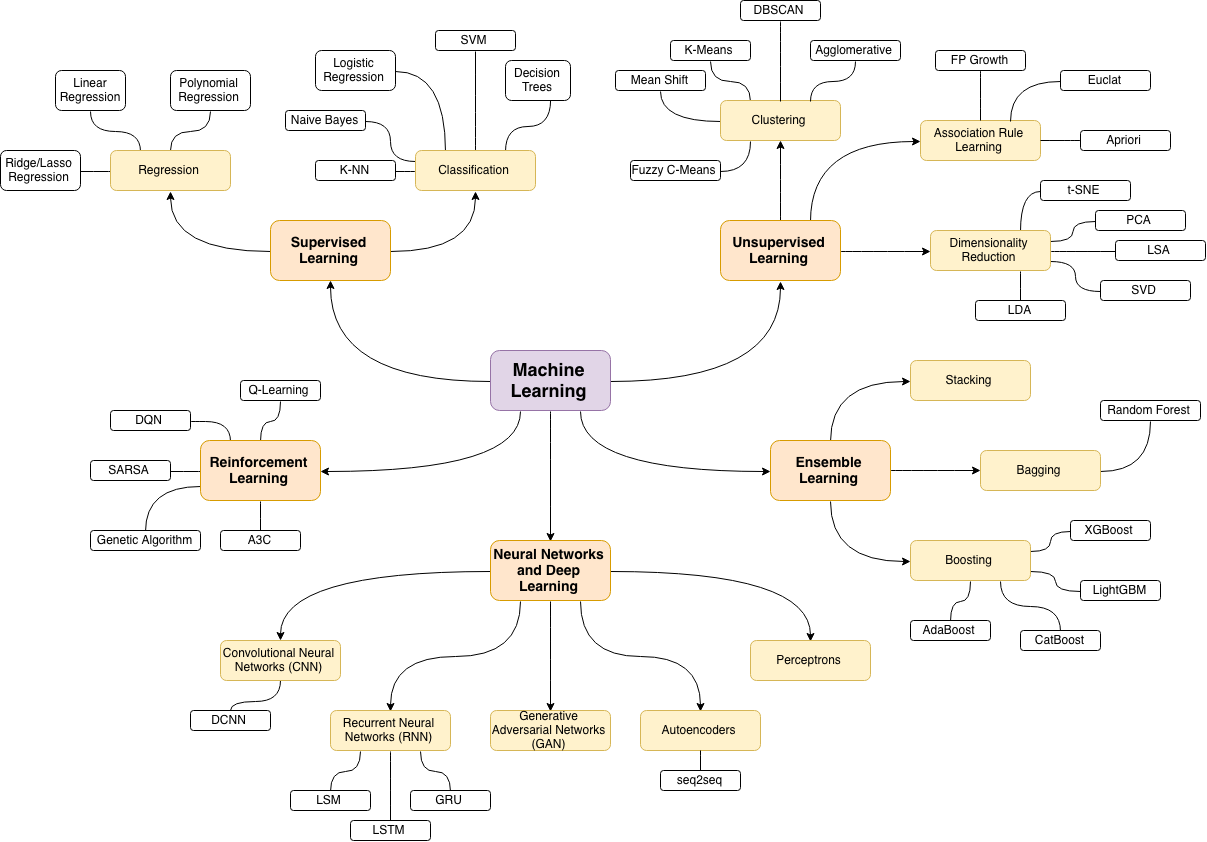

Homemade Machine Learning

The purpose of this repository is not to implement machine learning algorithms using third-party library one-liners but rather to practice implementing these algorithms from scratch and to gain a better understanding of the mathematics behind each algorithm. That’s why all the algorithm implementations are called “homemade” and are not intended for production use.
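To give a flavor of the "from scratch" approach, here is a minimal sketch (not code taken from the repository) of linear regression fitted by batch gradient descent in plain Python, with the gradient of the mean squared error written out by hand instead of delegated to a library. The function name `fit_linear_regression`, the learning rate, and the epoch count are illustrative assumptions.

```python
from typing import List, Tuple


def fit_linear_regression(
    xs: List[float], ys: List[float], learning_rate: float = 0.05, epochs: int = 2000
) -> Tuple[float, float]:
    """Fit y ~ w * x + b by batch gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Residuals of the current model on every training example.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Partial derivatives of MSE = (1/n) * sum(err^2) with respect to w and b.
        grad_w = (2.0 / n) * sum(e * x for e, x in zip(errors, xs))
        grad_b = (2.0 / n) * sum(errors)
        # Step against the gradient.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Data generated from y = 2x + 1, so the fit should approach w = 2, b = 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
w, b = fit_linear_regression(xs, ys)
print(f"w = {w:.3f}, b = {b:.3f}")  # w = 2.000, b = 1.000
```

Writing the gradient explicitly is exactly the kind of detail a one-liner such as a library `fit()` call hides, which is the point of a "homemade" implementation.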

Build Your First Image Classifier With Convolutional Neural Network (CNN)

A Beginner’s Guide to CNNs with TensorFlow

A Convolutional Neural Network (CNN) is a type of deep neural network used primarily in image classification and computer vision applications. This article will guide you through creating your own image classification model by implementing a CNN with the TensorFlow package in Python.
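The excerpt does not include the article's TensorFlow code, so the following is only a framework-free sketch of what one convolutional block computes: a 2D convolution (strictly, cross-correlation, as most deep learning libraries implement it), a ReLU, and 2x2 max pooling. In TensorFlow/Keras these steps correspond to layers such as `tf.keras.layers.Conv2D` and `tf.keras.layers.MaxPooling2D`; the helper names, the toy image, and the hand-picked kernel below are assumptions for illustration, since a real CNN learns its kernel weights.

```python
from typing import List

Matrix = List[List[float]]


def conv2d(image: Matrix, kernel: Matrix) -> Matrix:
    """'Valid' 2D convolution (cross-correlation): slide the kernel, no padding."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj] for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(fmap: Matrix) -> Matrix:
    """Element-wise ReLU on a feature map."""
    return [[max(0.0, v) for v in row] for row in fmap]


def max_pool(fmap: Matrix, size: int = 2) -> Matrix:
    """Non-overlapping max pooling; trailing rows/columns that don't fill a window are dropped."""
    return [
        [
            max(fmap[i + di][j + dj] for di in range(size) for dj in range(size))
            for j in range(0, len(fmap[0]) - size + 1, size)
        ]
        for i in range(0, len(fmap) - size + 1, size)
    ]


# A 5x5 image with a vertical edge: dark on the left, bright on the right.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hand-picked vertical-edge detector (in a trained CNN these weights are learned).
kernel = [[-1, 0, 1],
          [-1, 0, 1],
          [-1, 0, 1]]

features = max_pool(relu(conv2d(image, kernel)))
print(features)  # [[3]]
```

The strong response (3) comes from the windows that straddle the dark-to-bright edge; the window over the uniformly bright region responds with 0.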

Artificial Intelligence is a Supercomputing problem

The next generation of Artificial Intelligence applications demands new and more powerful computing infrastructure. What do the computer systems that support artificial intelligence look like? How did we get here? Who has access to these systems? What is our responsibility as Artificial Intelligence practitioners?

[These posts will be used in the master’s course Supercomputers Architecture at UPC Barcelona Tech with the support of the BSC]

Part 1

Part 2

CrypTen: A Research Tool for Secure and Privacy-Preserving Machine Learning in PyTorch

Facebook’s PyTorch created a huge buzz when it was released five years ago. Today it is not only one of the most preferred frameworks for Machine Learning and Deep Learning models but also one of the most powerful research tools for building new libraries and frameworks (like Hugging Face, fast.ai, etc.). One of the most captivating libraries released by Facebook’s AI Research lab (FAIR) is CrypTen, a tool for secure computation in ML. CrypTen is an open-source Python framework, built on PyTorch, that provides secure and privacy-preserving machine learning.

CrypTen uses Secure Multiparty Computation (MPC) as its secure computing backend and narrows the gap between ML researchers/developers and cryptography by exposing PyTorch-style APIs for computing on encrypted data.
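To make the MPC idea concrete, here is a toy sketch of additive secret sharing, the kind of building block that secret-sharing-based MPC backends rely on. It is not CrypTen's API or its actual protocol: the ring size (2^64), the fixed-point scale (2^16), the three parties, and all function names are assumptions chosen for illustration. Each private value is split into random shares that individually reveal nothing, yet parties can add shared values locally and only the final sum is revealed.

```python
import random
from typing import List

MODULUS = 2 ** 64  # shares live in the integers modulo 2^64 (an assumption for this sketch)
SCALE = 2 ** 16    # fixed-point scale used to encode real numbers as integers


def encode(x: float) -> int:
    """Map a real number to a fixed-point element of the ring."""
    return round(x * SCALE) % MODULUS


def decode(v: int) -> float:
    """Inverse of encode; ring elements in the upper half represent negative numbers."""
    if v >= MODULUS // 2:
        v -= MODULUS
    return v / SCALE


def share(secret: int, n_parties: int) -> List[int]:
    """Split a ring element into n random shares that sum to it modulo MODULUS."""
    shares = [random.randrange(MODULUS) for _ in range(n_parties - 1)]
    shares.append((secret - sum(shares)) % MODULUS)
    return shares


def reconstruct(shares: List[int]) -> int:
    """Recombine all shares to reveal the underlying value."""
    return sum(shares) % MODULUS


def add_shared(a: List[int], b: List[int]) -> List[int]:
    """Each party adds its own two shares locally; no communication is needed."""
    return [(x + y) % MODULUS for x, y in zip(a, b)]


a = share(encode(3.5), n_parties=3)
b = share(encode(-1.25), n_parties=3)
# Any single share is a uniformly random number and reveals nothing about 3.5 or -1.25.
total = decode(reconstruct(add_shared(a, b)))
print(total)  # 2.25
```

Addition is the easy case; multiplication and non-linear operations require extra protocol machinery, which is where a library like CrypTen does most of its work behind the PyTorch-style interface.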

Anatomy Of A Quantum Machine Learning Algorithm

What is a Variational Quantum-Classical Algorithm and why do we need it?

… Variational Quantum-Classical Algorithms have become a popular way to think about quantum algorithms for near-term quantum devices. In these algorithms, classical computers perform the overall machine learning task on information they acquire from running certain hard-to-compute calculations on a quantum computer.

The quantum algorithm produces information based on a set of parameters provided by the classical algorithm. Because these quantum circuits depend on adjustable parameters, they are called Parameterized Quantum Circuits (PQCs).
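The division of labor described above can be sketched with a one-qubit toy example. Here the "quantum" part (an `RY(theta)` rotation applied to |0>, followed by measuring the expectation of Pauli Z) is simulated classically with plain Python, and a classical gradient-descent loop tunes `theta` to minimize that expectation. Gradients are obtained with the parameter-shift rule, i.e. from two extra circuit evaluations. The circuit, cost function, learning rate, and step count are illustrative assumptions, not the book's example.

```python
import math
from typing import Tuple


def ry_on_zero(theta: float) -> Tuple[float, float]:
    """Amplitudes of RY(theta)|0>, simulated classically (real-valued for this gate)."""
    return math.cos(theta / 2), math.sin(theta / 2)


def expectation_z(theta: float) -> float:
    """'Quantum' step: <Z> = |amp0|^2 - |amp1|^2 for the parameterized circuit."""
    amp0, amp1 = ry_on_zero(theta)
    return amp0 ** 2 - amp1 ** 2


def parameter_shift_grad(theta: float) -> float:
    """Gradient of <Z> from two shifted circuit evaluations (parameter-shift rule for RY)."""
    shift = math.pi / 2
    return (expectation_z(theta + shift) - expectation_z(theta - shift)) / 2


# Classical outer loop: gradient descent on the circuit parameter to minimize <Z>.
theta, learning_rate = 0.1, 0.4
for _ in range(200):
    theta -= learning_rate * parameter_shift_grad(theta)

print(f"theta = {theta:.4f}, <Z> = {expectation_z(theta):.4f}")  # theta = 3.1416, <Z> = -1.0000
```

On real hardware `expectation_z` would be estimated from repeated measurements rather than computed exactly, but the outer optimization loop would look much the same.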

Get the book: Hands-On Quantum Machine Learning With Python.

8 reasons Python will rule the enterprise — and 8 reasons it won’t

The rise of Python will lead many enterprise managers to wonder whether it’s time to jump on the hype train. Let’s weigh the pros and cons.

There’s no question that Python is hugely popular with software developers, or that its popularity continues to rise. TIOBE, a software company that publishes a measure of the popularity of programming languages every month, reported in November that Python had climbed into the number two slot for the first time, passing Java.

… To try to make sense of this impossible question, we’ve drawn up a list of eight reasons why joining the crowd is smart and eight other reasons why you might want to wait a few decades.

Bird by Bird using Deep Learning

This article demonstrates how deep learning models used for image-related tasks can be extended to address the fine-grained classification problem. It has two parts: the first introduces some basic concepts of computer vision and convolutional neural networks, and the second applies this knowledge to a real-world problem, bird species classification, using PyTorch. Specifically, you will learn how to build your own CNN model (ResNet-50) and further improve its performance using transfer learning, an auxiliary task, an attention-enhanced architecture, and even a little more.
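One of the techniques listed, training with an auxiliary task, usually comes down to optimizing a weighted sum of losses from two output heads that share one backbone. The excerpt does not say what the article's auxiliary task is, so the sketch below is a generic illustration in plain Python: the auxiliary labels, the logits, and the weight `aux_weight=0.5` are all hypothetical. In PyTorch the same idea would combine two cross-entropy terms before calling `backward()`.

```python
import math
from typing import List


def cross_entropy(logits: List[float], target: int) -> float:
    """Softmax cross-entropy for one example, using the log-sum-exp trick for stability."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


def total_loss(
    species_logits: List[float],
    species_target: int,
    aux_logits: List[float],
    aux_target: int,
    aux_weight: float = 0.5,  # hypothetical weighting of the auxiliary task
) -> float:
    """Main classification loss plus a down-weighted auxiliary-task loss."""
    main = cross_entropy(species_logits, species_target)
    aux = cross_entropy(aux_logits, aux_target)
    return main + aux_weight * aux


# Hypothetical outputs of two heads sharing one backbone: 4 species and 2 auxiliary classes.
species_logits = [2.0, 0.5, -1.0, 0.1]
aux_logits = [0.3, 1.2]
print(round(total_loss(species_logits, 0, aux_logits, 1), 4))
```

Because both terms flow back into the shared backbone, the auxiliary head acts as an extra training signal, while `aux_weight` keeps it from dominating the main species objective.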

Introduction to Linear Algebra for Applied Machine Learning with Python

Linear algebra is to machine learning as flour is to baking: every machine learning model is based on linear algebra, as every cake is based on flour. It is not the only ingredient, of course. Machine learning models need vector calculus, probability, and optimization, as cakes need sugar, eggs, and butter. Applied machine learning, like baking, is essentially about combining these mathematical ingredients in clever ways to create useful (tasty?) models.

This document contains introductory level linear algebra notes for applied machine learning. It is meant as a reference rather than a comprehensive review. … The notes are based on a series of (mostly) freely available textbooks, video lectures, and classes I’ve read, watched and taken in the past. If you want to obtain a deeper understanding or to find exercises for each topic, you may want to consult those sources directly.
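As a small illustration of why linear algebra is the "flour" of machine learning, here is a plain-Python sketch of the dot product, matrix-vector product, and matrix multiplication, used to compute a linear layer y = Wx + b. The helper names and the example numbers are assumptions for illustration; in practice a library such as NumPy would do this far more efficiently.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def dot(u: Vector, v: Vector) -> float:
    """Inner product: sum of element-wise products."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    return sum(a * b for a, b in zip(u, v))


def matvec(A: Matrix, x: Vector) -> Vector:
    """Matrix-vector product: each output entry is a row of A dotted with x."""
    return [dot(row, x) for row in A]


def transpose(A: Matrix) -> Matrix:
    """Swap rows and columns."""
    return [list(col) for col in zip(*A)]


def matmul(A: Matrix, B: Matrix) -> Matrix:
    """Entry (i, j) is row i of A dotted with column j of B."""
    B_t = transpose(B)
    return [[dot(row, col) for col in B_t] for row in A]


# A linear layer y = W x + b, the core computation behind many ML models.
W = [[1.0, 2.0],
     [0.0, -1.0],
     [3.0, 1.0]]
x = [2.0, 1.0]
b = [0.5, 0.5, 0.5]
y = [wx + bi for wx, bi in zip(matvec(W, x), b)]
print(y)  # [4.5, -0.5, 7.5]
```

Note how every operation reduces to dot products; that is why efficient linear algebra routines dominate the cost of training and inference.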

7 popular activation functions you should know in Deep Learning and how to use them with Keras and TensorFlow 2

In artificial neural networks (ANNs), the activation function is a mathematical “gate” in between the input feeding the current neuron and its output going to the next layer [1].

Activation functions are at the very core of Deep Learning. They affect a model’s output, its accuracy, and its computational efficiency. In some cases, they have a major effect on the model’s ability to converge and on its convergence speed.

In this article, you’ll learn about seven of the most popular activation functions in Deep Learning (Sigmoid, Tanh, ReLU, Leaky ReLU, PReLU, ELU, and SELU) and how to use them with Keras and TensorFlow 2.
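For reference, here is a plain-Python sketch of the seven functions as scalar formulas, so their shapes can be compared without a framework. In Keras, several of them can be selected by name (for example `activation="relu"`, `"sigmoid"`, `"tanh"`, `"elu"`, `"selu"`), while Leaky ReLU and PReLU are also available as layers (`tf.keras.layers.LeakyReLU`, `tf.keras.layers.PReLU`). The Leaky ReLU slope of 0.01 is an assumed common choice, not necessarily Keras's default, and PReLU's slope is passed in here even though in a real network it is learned.

```python
import math

# Standard SELU constants (alpha and scale), rounded to double precision.
SELU_ALPHA = 1.6732632423543772
SELU_SCALE = 1.0507009873554805


def sigmoid(x: float) -> float:
    """Logistic function, written to avoid overflow for large negative x."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def tanh(x: float) -> float:
    return math.tanh(x)


def relu(x: float) -> float:
    return max(0.0, x)


def leaky_relu(x: float, alpha: float = 0.01) -> float:
    """Small fixed slope for negative inputs (alpha = 0.01 is an assumption)."""
    return x if x > 0 else alpha * x


def prelu(x: float, alpha: float) -> float:
    """Same form as Leaky ReLU, but alpha is a learned parameter in a real network."""
    return x if x > 0 else alpha * x


def elu(x: float, alpha: float = 1.0) -> float:
    return x if x > 0 else alpha * (math.exp(x) - 1.0)


def selu(x: float) -> float:
    return SELU_SCALE * (x if x > 0 else SELU_ALPHA * (math.exp(x) - 1.0))


for name, fn in [("sigmoid", sigmoid), ("tanh", tanh), ("relu", relu),
                 ("leaky_relu", leaky_relu), ("elu", elu), ("selu", selu)]:
    print(name, [round(fn(v), 4) for v in (-2.0, 0.0, 2.0)])
print("prelu (alpha=0.25)", [prelu(v, 0.25) for v in (-2.0, 0.0, 2.0)])
```

Comparing the outputs at -2.0 shows the main design difference: ReLU discards negative inputs entirely, while Leaky ReLU, PReLU, ELU, and SELU keep a non-zero response there, which can help gradients keep flowing.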